In modern system architecture, message queues play a crucial role in enabling communication between different components and managing data flow in complex distributed systems.

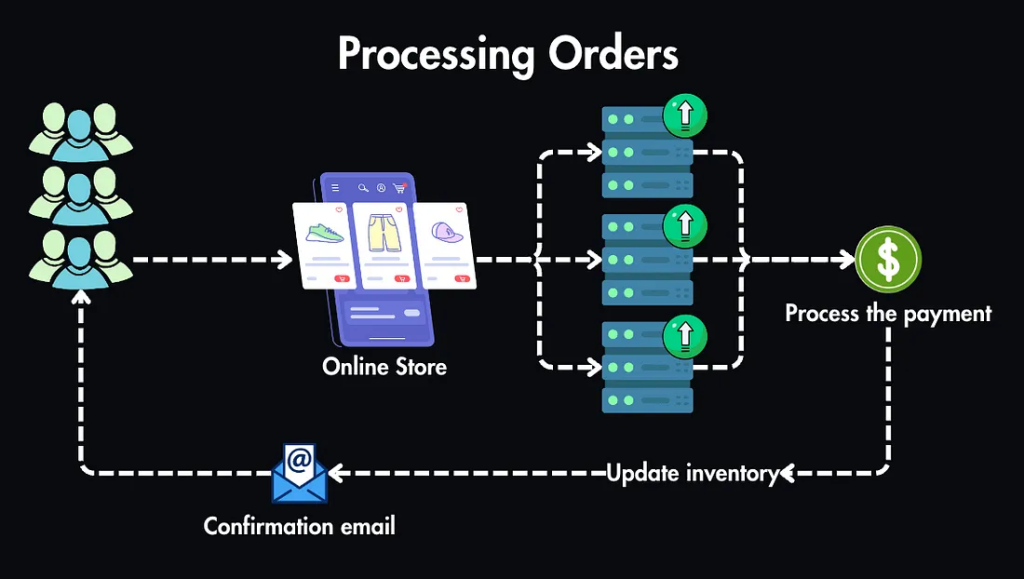

Imagine an online store where, each time a customer places an order, the system must:

- Process the payment,

- Update the inventory,

- Send a confirmation email.

Performing all of these tasks while the customer waits, especially during peak times, can slow down checkout. There are many events to manage, and trying to process them all at once creates a bottleneck.

While we could scale up server resources to handle these demands, it's often more efficient to queue the tasks and process them asynchronously, at a pace the workers can sustain.
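To make the trade-off concrete, here is a minimal Python sketch. The three task functions are hypothetical stand-ins that each sleep for 50 ms to simulate a slow downstream call. The synchronous handler makes the customer wait for all three. The queued handler only records the order in an in-process `queue.Queue` and returns, leaving the slow steps to background workers (not shown here).

```python
import queue
import time


# Hypothetical stand-ins for slow downstream calls; each sleeps to simulate I/O.
def process_payment(order_id: int) -> None:
    time.sleep(0.05)


def update_inventory(order_id: int) -> None:
    time.sleep(0.05)


def send_confirmation_email(order_id: int) -> None:
    time.sleep(0.05)


def checkout_sync(order_id: int) -> float:
    """Do every step inline; the customer waits for all of them."""
    start = time.perf_counter()
    process_payment(order_id)
    update_inventory(order_id)
    send_confirmation_email(order_id)
    return time.perf_counter() - start


pending_orders: queue.Queue = queue.Queue()


def checkout_queued(order_id: int) -> float:
    """Only record the order; background workers do the slow steps later."""
    start = time.perf_counter()
    pending_orders.put(order_id)
    return time.perf_counter() - start


print(f"synchronous: {checkout_sync(1) * 1000:.0f} ms")
print(f"queued:      {checkout_queued(2) * 1000:.3f} ms")
```

The exact timings depend on the machine. The point is that the synchronous path costs at least the sum of the three calls (about 150 ms here), while the queued path costs only an in-memory append.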

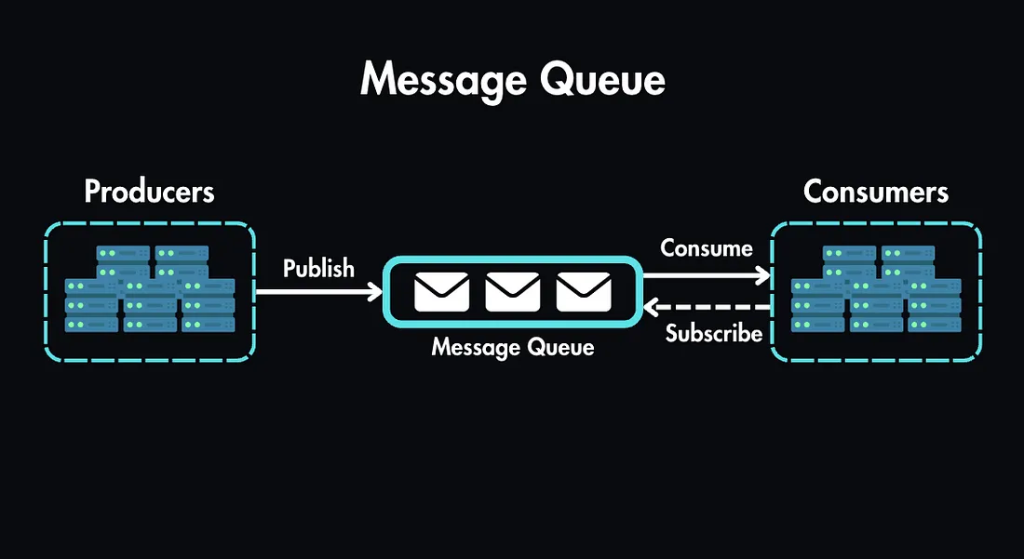

A message queue enables asynchronous communication by acting as a buffer that spreads requests out over time. It temporarily holds messages until a consumer is ready to process them. Depending on the implementation and configuration, those messages are kept in memory, persisted to disk, or both.
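The buffering effect comes down to simple arithmetic. The sketch below is a discrete-time simulation with made-up numbers: a burst of 100 orders per second for 10 seconds against workers that can finish 40 orders per second. The backlog grows by 100 − 40 = 60 messages per second, peaks at 600, and then needs 600 / 40 = 15 more seconds to drain. Nothing is dropped; the queue simply absorbs the excess.

```python
def simulate_backlog(arrivals: list[int], capacity_per_tick: int) -> list[int]:
    """Return the queue length at the end of each one-second tick.

    Each tick, new messages are enqueued, then workers remove up to
    `capacity_per_tick` of whatever is waiting.
    """
    backlog = 0
    history = []
    for arriving in arrivals:
        backlog += arriving
        backlog -= min(backlog, capacity_per_tick)
        history.append(backlog)
    return history


# Illustrative load: a 10 s burst at 100 msg/s, then 20 s with no new traffic.
arrivals = [100] * 10 + [0] * 20
history = simulate_backlog(arrivals, capacity_per_tick=40)

peak = max(history)
drained_at = next(t for t, n in enumerate(history) if n == 0) + 1
print(f"peak backlog: {peak} messages, queue empty after {drained_at} s")
# peak backlog: 600 messages, queue empty after 25 s
```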

The basic structure of a message queue is straightforward:

- Producers (or publishers) generate and publish messages to the queue.

- Consumers (or subscribers) connect to the queue to perform actions defined by those messages.

In a real-world setup, multiple applications can publish to a queue, and several servers can read from it.
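A minimal in-process sketch of this structure follows. It uses Python's standard-library `queue.Queue` and threads in place of a real broker: two producer threads publish messages, and three consumer threads take them off the same shared queue. Sending one `None` sentinel per consumer is an assumption of this sketch, used only to shut the workers down cleanly.

```python
import queue
import threading


def producer(q: queue.Queue, name: str, count: int) -> None:
    # Publish `count` messages tagged with this producer's name.
    for i in range(count):
        q.put(f"{name}-msg-{i}")


def consumer(q: queue.Queue, results: list, lock: threading.Lock) -> None:
    # Keep taking messages until the shutdown sentinel (None) arrives.
    while True:
        msg = q.get()
        if msg is None:
            break
        with lock:
            results.append(msg)  # stand-in for the real work


def run(num_producers: int = 2, num_consumers: int = 3, per_producer: int = 5) -> list:
    q: queue.Queue = queue.Queue()
    results: list = []
    lock = threading.Lock()
    consumers = [
        threading.Thread(target=consumer, args=(q, results, lock))
        for _ in range(num_consumers)
    ]
    producers = [
        threading.Thread(target=producer, args=(q, f"app{p}", per_producer))
        for p in range(num_producers)
    ]
    for t in consumers + producers:
        t.start()
    for t in producers:
        t.join()
    # All real messages are queued by now, so one sentinel per consumer
    # lands behind them and stops each consumer exactly once.
    for _ in consumers:
        q.put(None)
    for t in consumers:
        t.join()
    return results


processed = run()
print(len(processed), "messages processed")  # 10 messages processed
```

Which consumer handles which message is up to the scheduler. The guarantee is only that every published message is taken by exactly one consumer.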

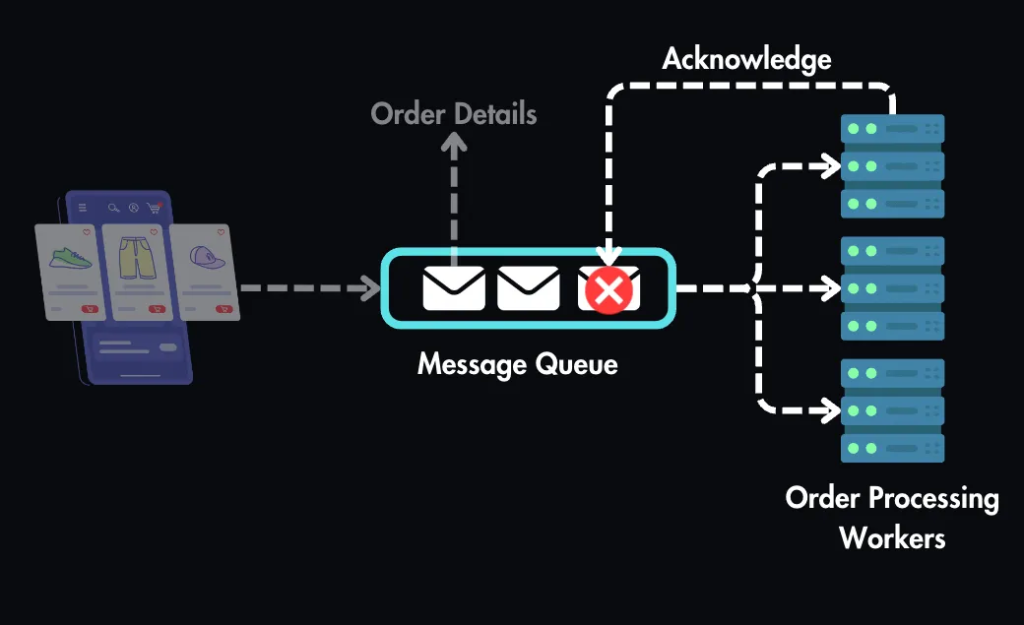

Rather than handling each task immediately, we can add it to the queue to be processed sequentially:

- Order placed: The order details are packaged as a message.

- Message added to queue: This message joins the queue.

- Workers process tasks: Dedicated workers pull messages from the queue in sequence to handle each task.
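The three steps above could look roughly like this in Python. The order fields, the JSON encoding, and the `handle_*` functions are hypothetical placeholders. A real system would call a payment provider, a database, and an email service, and would usually run the worker in a separate process rather than in the same script.

```python
import json
import queue

order_queue: queue.Queue = queue.Queue()
log: list[str] = []


# Hypothetical task handlers; each only records what it would do.
def handle_payment(order: dict) -> None:
    log.append(f"charged {order['total']} for order {order['order_id']}")


def handle_inventory(order: dict) -> None:
    for sku, qty in order["items"].items():
        log.append(f"reserved {qty} x {sku}")


def handle_email(order: dict) -> None:
    log.append(f"emailed {order['email']}")


def place_order(order: dict) -> None:
    """Order placed and message added: package the order as JSON and enqueue it."""
    order_queue.put(json.dumps(order))


def worker_drain() -> int:
    """Workers process tasks: pull messages in arrival order and run each task.

    Checking empty() is only safe here because a single thread drains the queue.
    """
    handled = 0
    while not order_queue.empty():
        order = json.loads(order_queue.get())
        handle_payment(order)
        handle_inventory(order)
        handle_email(order)
        handled += 1
    return handled


place_order({"order_id": 1, "total": 25.0, "items": {"SKU-1": 2}, "email": "a@example.com"})
place_order({"order_id": 2, "total": 9.5, "items": {"SKU-2": 1}, "email": "b@example.com"})
print(worker_drain())  # 2
print(log[0])          # charged 25.0 for order 1
```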

After successfully processing a message, the worker acknowledges it, and the queue removes it so it isn't delivered again.
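The toy class below sketches the general idea behind acknowledgements. It is not the API of any particular broker. A received message is moved to an "in flight" set rather than deleted. Only `ack` removes it for good, and `nack` (a name borrowed from common broker terminology) puts a failed message back so it can be delivered again.

```python
from collections import deque
from itertools import count


class AckQueue:
    """Toy queue: a delivered message stays 'in flight' until it is acknowledged."""

    def __init__(self) -> None:
        self._ready: deque = deque()
        self._in_flight: dict[int, str] = {}
        self._ids = count(1)

    def publish(self, body: str) -> None:
        self._ready.append((next(self._ids), body))

    def receive(self):
        """Hand out the next message, but keep a copy until it is acked."""
        if not self._ready:
            return None
        msg_id, body = self._ready.popleft()
        self._in_flight[msg_id] = body
        return msg_id, body

    def ack(self, msg_id: int) -> None:
        """Processing succeeded: forget the message permanently."""
        del self._in_flight[msg_id]

    def nack(self, msg_id: int) -> None:
        """Processing failed: return the message to the front for redelivery."""
        body = self._in_flight.pop(msg_id)
        self._ready.appendleft((msg_id, body))

    def __len__(self) -> int:
        return len(self._ready) + len(self._in_flight)


q = AckQueue()
q.publish("order-1")
q.publish("order-2")

msg_id, body = q.receive()   # (1, 'order-1')
q.nack(msg_id)               # worker failed: order-1 goes back to the front
msg_id, body = q.receive()   # (1, 'order-1') is delivered again
q.ack(msg_id)                # success: order-1 is removed for good
print(len(q))                # 1 (only order-2 remains)
```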

Message queues offer several advantages:

- Asynchronous Processing: Producers and consumers are decoupled, so a task doesn't need to be handled at the moment it is created, which allows for efficient, asynchronous processing.

- High Availability: Producers can keep posting messages even if consumers are temporarily unavailable, and consumers can keep working through queued messages even if producers go offline.

- Durability: When messages are persisted to disk rather than held only in RAM, they survive a crash or restart of the queue.

- Fault Tolerance: If a worker crashes mid-task, the message is never acknowledged, so it stays in (or returns to) the queue, ready to be picked up by another worker.

- Scalability: When there’s a surge in orders, the queue lengthens. Additional workers can be added to manage the load without affecting website performance.
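The Durability and Fault Tolerance points can be combined in one small sketch. Here SQLite, through Python's built-in `sqlite3` module, stands in for a broker's on-disk storage. This is an assumption for illustration only; real brokers use their own storage engines. Messages are rows in a file, so they outlive the process. A worker "leases" a row for a fixed number of seconds, and a lease that expires without an ack (for example, because the worker crashed) makes the message available again. The `now` argument is passed explicitly so the demo does not have to wait in real time.

```python
import os
import sqlite3
import tempfile
import time
from typing import Optional, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS messages (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    body TEXT NOT NULL,
    leased_until REAL NOT NULL DEFAULT 0
)
"""


class SqliteQueue:
    """Toy durable queue: messages are rows in a file, so they outlive the process."""

    def __init__(self, path: str, lease_seconds: float = 30.0) -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(SCHEMA)
        self.conn.commit()
        self.lease_seconds = lease_seconds

    def publish(self, body: str) -> None:
        with self.conn:  # commits the insert on success
            self.conn.execute("INSERT INTO messages (body) VALUES (?)", (body,))

    def receive(self, now: Optional[float] = None) -> Optional[Tuple[int, str]]:
        """Lease the oldest message that nobody currently holds."""
        now = time.time() if now is None else now
        with self.conn:
            row = self.conn.execute(
                "SELECT id, body FROM messages WHERE leased_until <= ? "
                "ORDER BY id LIMIT 1",
                (now,),
            ).fetchone()
            if row is None:
                return None
            # Conditional update so two workers cannot both lease the same row.
            cur = self.conn.execute(
                "UPDATE messages SET leased_until = ? "
                "WHERE id = ? AND leased_until <= ?",
                (now + self.lease_seconds, row[0], now),
            )
            return row if cur.rowcount == 1 else None

    def ack(self, msg_id: int) -> None:
        """Processing finished: delete the message permanently."""
        with self.conn:
            self.conn.execute("DELETE FROM messages WHERE id = ?", (msg_id,))


path = os.path.join(tempfile.mkdtemp(), "queue.db")

worker_a = SqliteQueue(path, lease_seconds=30)
worker_a.publish("order-42")
first = worker_a.receive(now=1000.0)   # worker A leases the message until t=1030...
worker_a.conn.close()                  # ...then "crashes" without acking

worker_b = SqliteQueue(path, lease_seconds=30)  # a new process opens the same file
during_lease = worker_b.receive(now=1010.0)     # None: A's lease is still active
redelivered = worker_b.receive(now=1031.0)      # lease expired: message comes back
worker_b.ack(redelivered[0])
after_ack = worker_b.receive(now=2000.0)        # None: acknowledged and deleted
print(first, during_lease, redelivered, after_ack)
# (1, 'order-42') None (1, 'order-42') None
```

The fixed lease is a simplification. Production systems also have to decide how long a lease should be and what happens if a slow worker finishes after its lease has expired.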

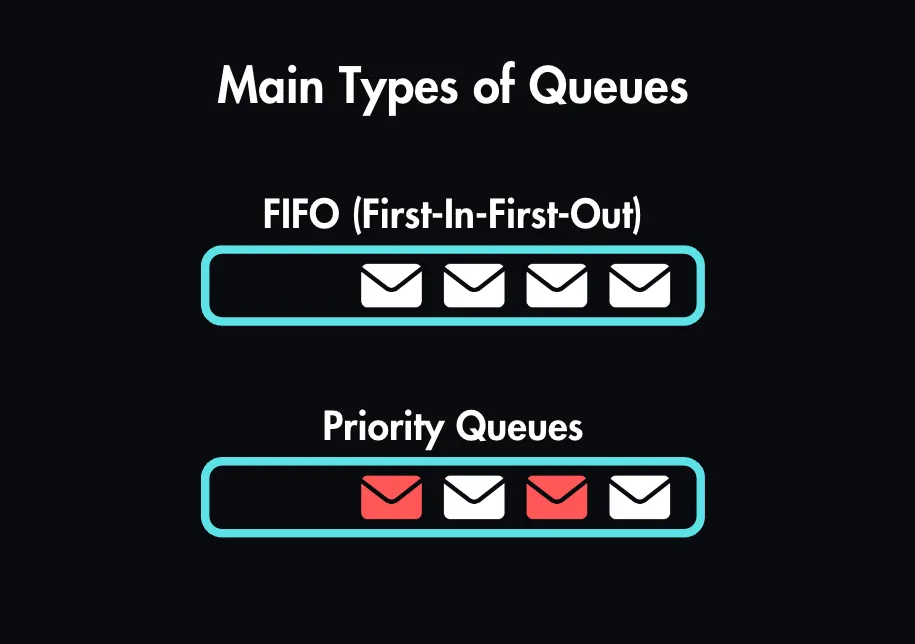

Different queues suit different needs:

- FIFO (First-In-First-Out): Messages are processed in order of arrival, ideal for tasks like payment processing.

- Priority Queues: More critical messages are prioritized, ensuring they’re handled first.
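Both disciplines can be shown with Python's standard library: a `collections.deque` for FIFO and `heapq` for priorities. The message names and priority numbers are made up for the example. In the priority queue, a lower number means more urgent, and an arrival counter breaks ties so that equally urgent messages still come out in arrival order.

```python
import heapq
from collections import deque
from itertools import count

# FIFO: messages leave in the order they arrived.
fifo = deque()
for msg in ["newsletter", "payment", "report"]:
    fifo.append(msg)
fifo_order = [fifo.popleft() for _ in range(len(fifo))]


class PriorityMessageQueue:
    """Lower priority number = handled first; ties keep arrival order."""

    def __init__(self) -> None:
        self._heap: list = []
        self._seq = count()

    def put(self, priority: int, body: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), body))

    def get(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


pq = PriorityMessageQueue()
pq.put(5, "newsletter")
pq.put(1, "payment")
pq.put(5, "report")
priority_order = [pq.get() for _ in range(len(pq))]

print(fifo_order)      # ['newsletter', 'payment', 'report']
print(priority_order)  # ['payment', 'newsletter', 'report']
```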

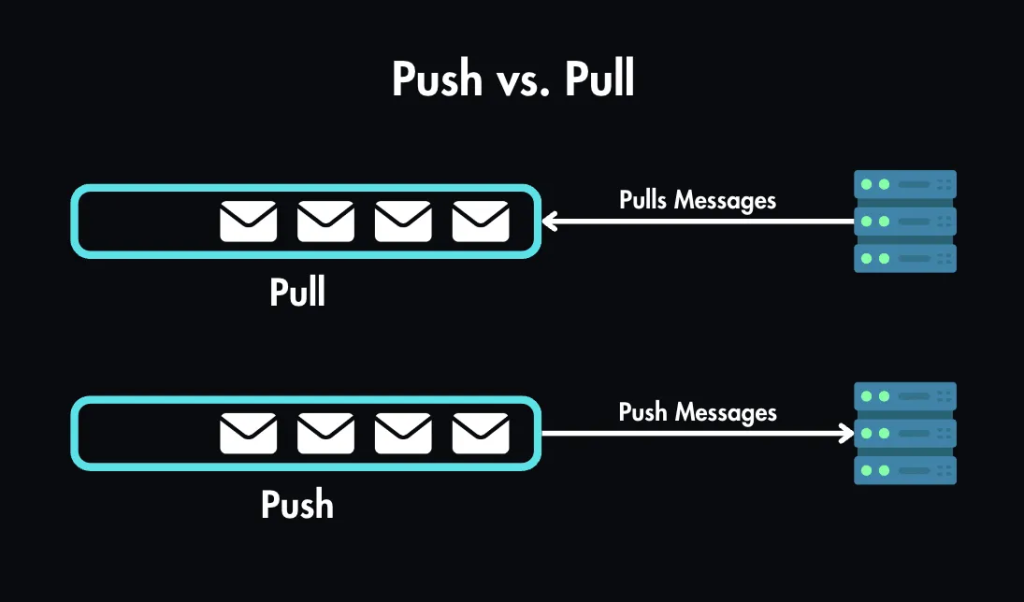

Some queues wait for workers to ask for messages (pull-based queue), while others actively send messages to workers (push-based queue).
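The two delivery styles differ in who decides when a message moves. The toy classes below illustrate this in plain Python and are not modeled on any specific product. `PullQueue` does nothing until a consumer calls `poll`. `PushQueue` calls a registered consumer callback as soon as a message arrives, round-robin across consumers, and holds messages only while no consumer is registered.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class PullQueue:
    """Pull-based: the queue waits; consumers ask for the next message."""

    def __init__(self) -> None:
        self._messages: Deque[str] = deque()

    def publish(self, msg: str) -> None:
        self._messages.append(msg)

    def poll(self) -> Optional[str]:
        return self._messages.popleft() if self._messages else None


class PushQueue:
    """Push-based: the queue hands each message to a registered consumer."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[str], None]] = []
        self._pending: Deque[str] = deque()
        self._next = 0

    def subscribe(self, callback: Callable[[str], None]) -> None:
        self._subscribers.append(callback)
        while self._pending:  # flush anything published before a consumer existed
            self._deliver(self._pending.popleft())

    def publish(self, msg: str) -> None:
        if self._subscribers:
            self._deliver(msg)
        else:
            self._pending.append(msg)

    def _deliver(self, msg: str) -> None:
        # Round-robin across subscribers.
        callback = self._subscribers[self._next % len(self._subscribers)]
        self._next += 1
        callback(msg)


pull = PullQueue()
for m in ["a", "b"]:
    pull.publish(m)
pulled = []
while (msg := pull.poll()) is not None:  # the consumer decides when to fetch
    pulled.append(msg)

received = {"w1": [], "w2": []}
push = PushQueue()
push.subscribe(received["w1"].append)
push.subscribe(received["w2"].append)
for m in ["a", "b", "c"]:
    push.publish(m)  # the queue decides when to deliver

print(pulled)    # ['a', 'b']
print(received)  # {'w1': ['a', 'c'], 'w2': ['b']}
```

Pulling lets each consumer control its own pace. Pushing delivers with lower latency but needs some form of flow control so fast producers don't overwhelm slow consumers.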

Some widely used message queues include:

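- RabbitMQ: A general-purpose message broker built around the AMQP protocol, with flexible routing of messages to queues; a versatile option for many applications.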

- Kafka: Designed for high throughput and real-time streaming, suitable for event-driven architectures.

- Amazon SQS (Simple Queue Service): A managed cloud-based solution on AWS, offering features like delay queues and dead-letter queues for additional reliability.
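Dead-letter queues are worth a quick illustration. A message that keeps failing is moved aside after a fixed number of delivery attempts, so it doesn't loop forever or block healthy messages. In SQS this threshold is the `maxReceiveCount` setting of a queue's redrive policy. The sketch below is a plain-Python imitation of that behavior, not the SQS API. The `process` handler and the limit of 3 attempts are hypothetical.

```python
from collections import deque

MAX_RECEIVES = 3  # hypothetical limit, analogous to a redrive policy's maxReceiveCount


def process(body: str) -> None:
    """Hypothetical handler: any message containing 'bad' always fails."""
    if "bad" in body:
        raise ValueError(f"cannot process {body!r}")


def drain(main: deque, dead_letters: list) -> list:
    """Deliver messages until the main queue is empty; return the successes."""
    done = []
    while main:
        body, receives = main.popleft()
        receives += 1
        try:
            process(body)
            done.append(body)                 # success: acknowledged and gone
        except ValueError:
            if receives >= MAX_RECEIVES:
                dead_letters.append(body)     # give up: park it for inspection
            else:
                main.append((body, receives))  # retry on a later pass


    return done


main_queue = deque([("order-1", 0), ("bad-order", 0), ("order-2", 0)])
dlq: list = []
succeeded = drain(main_queue, dlq)
print(succeeded)  # ['order-1', 'order-2']
print(dlq)        # ['bad-order']
```

Parking the message instead of deleting it leaves it available for debugging or a manual replay once the underlying problem is fixed.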

Message queues are fundamental components in distributed system design, enabling scalability, fault tolerance, and efficiency. From handling high-throughput tasks in data streaming to improving system reliability in microservices, they are crucial for modern application architectures. Choosing the right message queue, adhering to best practices, and designing with scalability in mind can help create robust and efficient systems that meet today’s complex requirements.

Happy coding🚀🚀🚀

We are happy to help https://synpass.pro/contactsynpass/ 🤝